Chapter 2 Modeling Process

Much like EDA, the ML process is very iterative and heurstic-based. With minimal knowledge of the problem or data at hand, it is difficult to know which ML method will perform best. This is known as the no free lunch theorem for ML (Wolpert 1996). Consequently, it is common for many ML approaches to be applied, evaluated, and modified before a final, optimal model can be determined. Performing this process correctly provides great confidence in our outcomes. If not, the results will be useless and, potentially, damaging.1

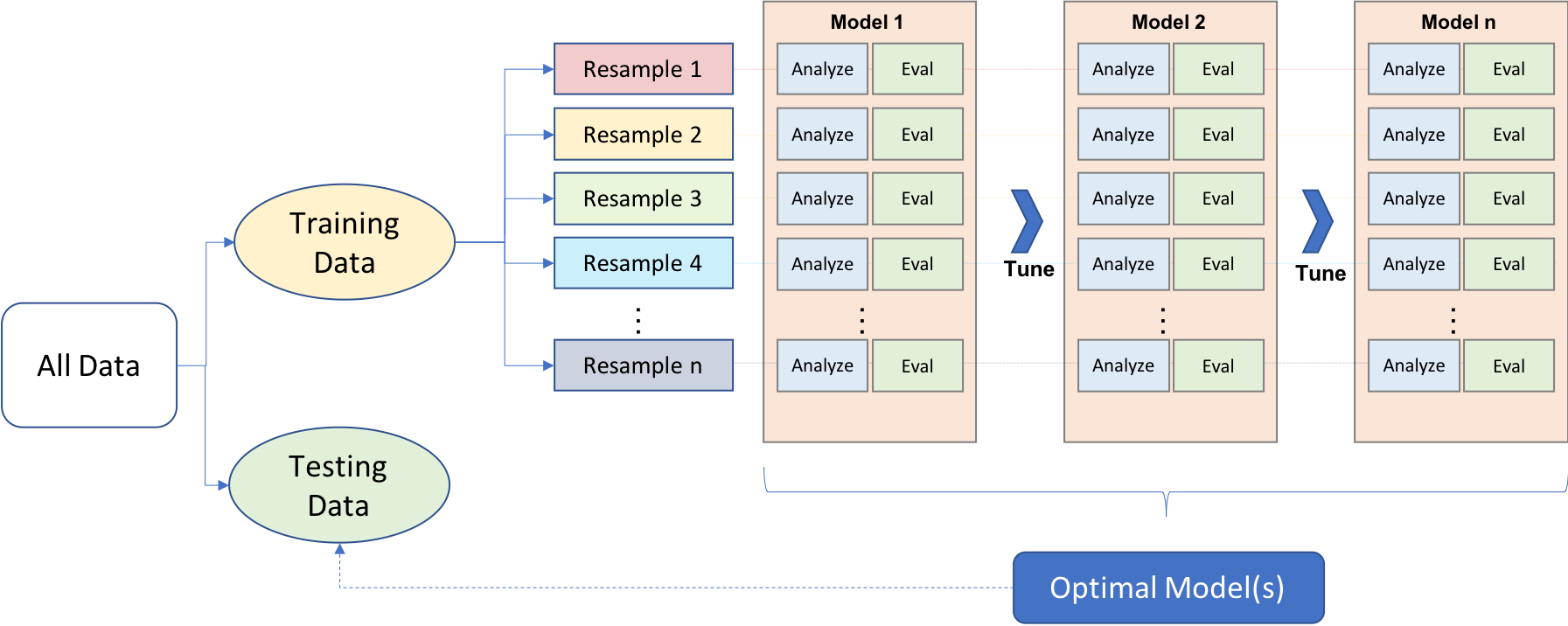

Approaching ML modeling correctly means approaching it strategically by spending our data wisely on learning and validation procedures, properly pre-processing the feature and target variables, minimizing data leakage (Section 3.8.2), tuning hyperparameters, and assessing model performance. Many books and courses portray the modeling process as a short sprint. A better analogy would be a marathon where many iterations of these steps are repeated before eventually finding the final optimal model. This process is illustrated in Figure 2.1. Before introducing specific algorithms, this chapter, and the next, introduce concepts that are fundamental to the ML modeling process and that you’ll see briskly covered in future modeling chapters.

Although the discussions in this chapter focus on supervised ML modeling, many of the topics also apply to unsupervised methods.

Figure 2.1: General predictive machine learning process.

2.1 Prerequisites

This chapter leverages the following packages.

# Helper packages

library(dplyr) # for data manipulation

library(ggplot2) # for awesome graphics

# Modeling process packages

library(rsample) # for resampling procedures

library(caret) # for resampling and model training

library(h2o) # for resampling and model training

# h2o set-up

h2o.no_progress() # turn off h2o progress bars

h2o.init() # launch h2oTo illustrate some of the concepts, we’ll use the Ames Housing and employee attrition data sets introduced in Chapter 1. Throughout this book, we’ll demonstrate approaches with ordinary R data frames. However, since many of the supervised machine learning chapters leverage the h2o package, we’ll also show how to do some of the tasks with H2O objects. You can convert any R data frame to an H2O object (i.e., import it to the H2O cloud) easily with as.h2o(<my-data-frame>).

If you try to convert the original rsample::attrition data set to an H2O object an error will occur. This is because several variables are ordered factors and H2O has no way of handling this data type. Consequently, you must convert any ordered factors to unordered; see ?base::ordered for details.

# Ames housing data

ames <- AmesHousing::make_ames()

ames.h2o <- as.h2o(ames)

# Job attrition data

churn <- rsample::attrition %>%

mutate_if(is.ordered, .funs = factor, ordered = FALSE)

churn.h2o <- as.h2o(churn)2.2 Data splitting

A major goal of the machine learning process is to find an algorithm \(f\left(X\right)\) that most accurately predicts future values (\(\hat{Y}\)) based on a set of features (\(X\)). In other words, we want an algorithm that not only fits well to our past data, but more importantly, one that predicts a future outcome accurately. This is called the generalizability of our algorithm. How we “spend” our data will help us understand how well our algorithm generalizes to unseen data.

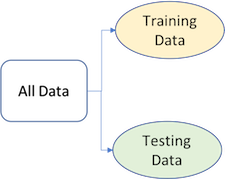

To provide an accurate understanding of the generalizability of our final optimal model, we can split our data into training and test data sets:

- Training set: these data are used to develop feature sets, train our algorithms, tune hyperparameters, compare models, and all of the other activities required to choose a final model (e.g., the model we want to put into production).

- Test set: having chosen a final model, these data are used to estimate an unbiased assessment of the model’s performance, which we refer to as the generalization error.

It is critical that the test set not be used prior to selecting your final model. Assessing results on the test set prior to final model selection biases the model selection process since the testing data will have become part of the model development process.

Figure 2.2: Splitting data into training and test sets.

Given a fixed amount of data, typical recommendations for splitting your data into training-test splits include 60% (training)–40% (testing), 70%–30%, or 80%–20%. Generally speaking, these are appropriate guidelines to follow; however, it is good to keep the following points in mind:

- Spending too much in training (e.g., \(>80\%\)) won’t allow us to get a good assessment of predictive performance. We may find a model that fits the training data very well, but is not generalizable (overfitting).

- Sometimes too much spent in testing (\(>40\%\)) won’t allow us to get a good assessment of model parameters.

Other factors should also influence the allocation proportions. For example, very large training sets (e.g., \(n > 100\texttt{K}\)) often result in only marginal gains compared to smaller sample sizes. Consequently, you may use a smaller training sample to increase computation speed (e.g., models built on larger training sets often take longer to score new data sets in production). In contrast, as \(p \geq n\) (where \(p\) represents the number of features), larger samples sizes are often required to identify consistent signals in the features.

The two most common ways of splitting data include simple random sampling and stratified sampling.

2.2.1 Simple random sampling

The simplest way to split the data into training and test sets is to take a simple random sample. This does not control for any data attributes, such as the distribution of your response variable (\(Y\)). There are multiple ways to split our data in R. Here we show four options to produce a 70–30 split in the Ames housing data:

Sampling is a random process so setting the random number generator with a common seed allows for reproducible results. Throughout this book we’ll often use the seed 123 for reproducibility but the number itself has no special meaning.

# Using base R

set.seed(123) # for reproducibility

index_1 <- sample(1:nrow(ames), round(nrow(ames) * 0.7))

train_1 <- ames[index_1, ]

test_1 <- ames[-index_1, ]

# Using caret package

set.seed(123) # for reproducibility

index_2 <- createDataPartition(ames$Sale_Price, p = 0.7,

list = FALSE)

train_2 <- ames[index_2, ]

test_2 <- ames[-index_2, ]

# Using rsample package

set.seed(123) # for reproducibility

split_1 <- initial_split(ames, prop = 0.7)

train_3 <- training(split_1)

test_3 <- testing(split_1)

# Using h2o package

split_2 <- h2o.splitFrame(ames.h2o, ratios = 0.7,

seed = 123)

train_4 <- split_2[[1]]

test_4 <- split_2[[2]]With sufficient sample size, this sampling approach will typically result in a similar distribution of \(Y\) (e.g., Sale_Price in the ames data) between your training and test sets, as illustrated below.

Figure 2.3: Training (black) vs. test (red) response distribution.

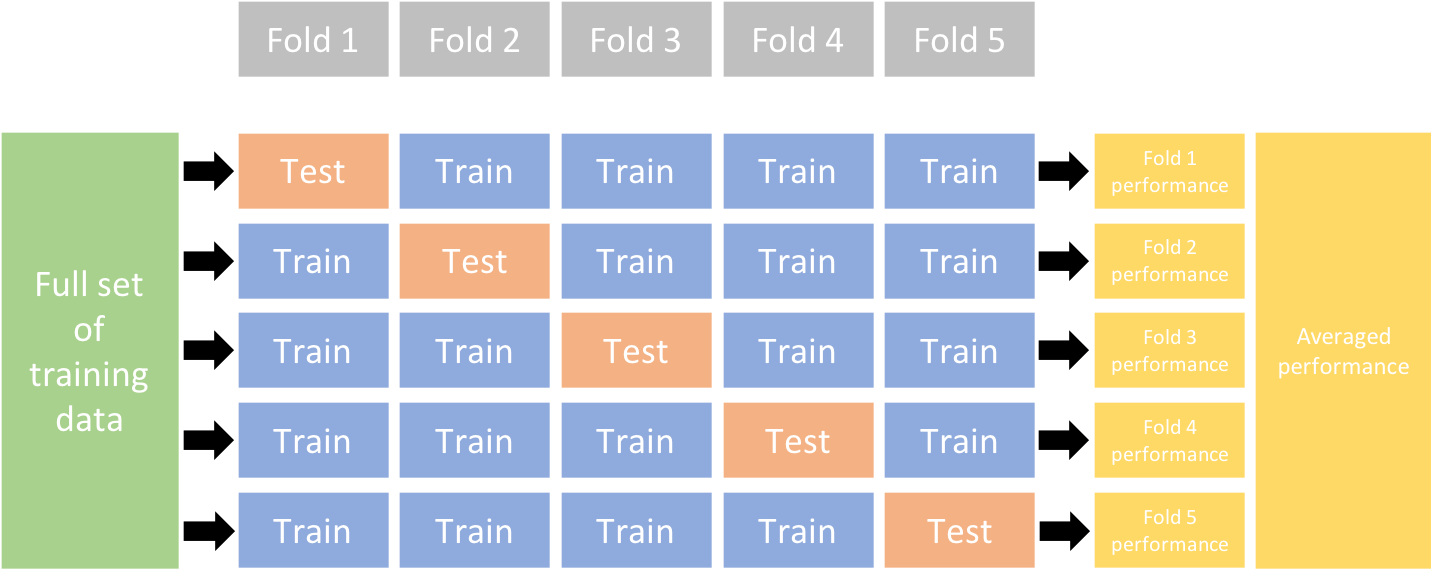

2.2.2 Stratified sampling

If we want to explicitly control the sampling so that our training and test sets have similar \(Y\) distributions, we can use stratified sampling. This is more common with classification problems where the response variable may be severely imbalanced (e.g., 90% of observations with response “Yes” and 10% with response “No”). However, we can also apply stratified sampling to regression problems for data sets that have a small sample size and where the response variable deviates strongly from normality (i.e., positively skewed like Sale_Price). With a continuous response variable, stratified sampling will segment \(Y\) into quantiles and randomly sample from each. Consequently, this will help ensure a balanced representation of the response distribution in both the training and test sets.

The easiest way to perform stratified sampling on a response variable is to use the rsample package, where you specify the response variable to stratafy. The following illustrates that in our original employee attrition data we have an imbalanced response (No: 84%, Yes: 16%). By enforcing stratified sampling, both our training and testing sets have approximately equal response distributions.

# orginal response distribution

table(churn$Attrition) %>% prop.table()

##

## No Yes

## 0.8387755 0.1612245

# stratified sampling with the rsample package

set.seed(123)

split_strat <- initial_split(churn, prop = 0.7,

strata = "Attrition")

train_strat <- training(split_strat)

test_strat <- testing(split_strat)

# consistent response ratio between train & test

table(train_strat$Attrition) %>% prop.table()

##

## No Yes

## 0.838835 0.161165

table(test_strat$Attrition) %>% prop.table()

##

## No Yes

## 0.8386364 0.16136362.2.3 Class imbalances

Imbalanced data can have a significant impact on model predictions and performance (Kuhn and Johnson 2013). Most often this involves classification problems where one class has a very small proportion of observations (e.g., defaults - 5% versus nondefaults - 95%). Several sampling methods have been developed to help remedy class imbalance and most of them can be categorized as either up-sampling or down-sampling.

Down-sampling balances the dataset by reducing the size of the abundant class(es) to match the frequencies in the least prevalent class. This method is used when the quantity of data is sufficient. By keeping all samples in the rare class and randomly selecting an equal number of samples in the abundant class, a balanced new dataset can be retrieved for further modeling. Furthermore, the reduced sample size reduces the computation burden imposed by further steps in the ML process.

On the contrary, up-sampling is used when the quantity of data is insufficient. It tries to balance the dataset by increasing the size of rarer samples. Rather than getting rid of abundant samples, new rare samples are generated by using repetition or bootstrapping (described further in Section 2.4.2).

Note that there is no absolute advantage of one sampling method over another. Application of these two methods depends on the use case it applies to and the data set itself. A combination of over- and under-sampling is often successful and a common approach is known as Synthetic Minority Over-Sampling Technique, or SMOTE (Chawla et al. 2002). This alternative sampling approach, as well as others, can be implemented in R (see the sampling argument in ?caret::trainControl()). Furthermore, many ML algorithms implemented in R have class weighting schemes to remedy imbalances internally (e.g., most h2o algorithms have a weights_column and balance_classes argument).

2.3 Creating models in R

The R ecosystem provides a wide variety of ML algorithm implementations. This makes many powerful algorithms available at your fingertips. Moreover, there are almost always more than one package to perform each algorithm (e.g., there are over 20 packages for fitting random forests). There are pros and cons to this wide selection; some implementations may be more computationally efficient while others may be more flexible (i.e., have more hyperparameter tuning options). Future chapters will expose you to many of the packages and algorithms that perform and scale best to the kinds of tabular data and problems encountered by most organizations.

However, this also has resulted in some drawbacks as there are inconsistencies in how algorithms allow you to define the formula of interest and how the results and predictions are supplied.2 In addition to illustrating the more popular and powerful packages, we’ll also show you how to use implementations that provide more consistency.

2.3.1 Many formula interfaces

To fit a model to our data, the model terms must be specified. Historically, there are two main interfaces for doing this. The formula interface uses R’s formula rules to specify a symbolic representation of the terms. For example, Y ~ X where we say “Y is a function of X”. To illustrate, suppose we have some generic modeling function called model_fn() which accepts an R formula, as in the following examples:

# Sale price as function of neighborhood and year sold

model_fn(Sale_Price ~ Neighborhood + Year_Sold,

data = ames)

# Variables + interactions

model_fn(Sale_Price ~ Neighborhood + Year_Sold +

Neighborhood:Year_Sold, data = ames)

# Shorthand for all predictors

model_fn(Sale_Price ~ ., data = ames)

# Inline functions / transformations

model_fn(log10(Sale_Price) ~ ns(Longitude, df = 3) +

ns(Latitude, df = 3), data = ames)This is very convenient but it has some disadvantages. For example:

- You can’t nest in-line functions such as performing principal components analysis on the feature set prior to executing the model (

model_fn(y ~ pca(scale(x1), scale(x2), scale(x3)), data = df)). - All the model matrix calculations happen at once and can’t be recycled when used in a model function.

- For very wide data sets, the formula method can be extremely inefficient (Kuhn 2017b).

- There are limited roles that variables can take which has led to several re-implementations of formulas.

- Specifying multivariate outcomes is clunky and inelegant.

- Not all modeling functions have a formula method (lack of consistency!).

Some modeling functions have a non-formula (XY) interface. These functions have separate arguments for the predictors and the outcome(s):

# Use separate inputs for X and Y

features <- c("Year_Sold", "Longitude", "Latitude")

model_fn(x = ames[, features], y = ames$Sale_Price)This provides more efficient calculations but can be inconvenient if you have transformations, factor variables, interactions, or any other operations to apply to the data prior to modeling.

Overall, it is difficult to determine if a package has one or both of these interfaces. For example, the lm() function, which performs linear regression, only has the formula method. Consequently, until you are familiar with a particular implementation you will need to continue referencing the corresponding help documentation.

A third interface, is to use variable name specification where we provide all the data combined in one training frame but we specify the features and response with character strings. This is the interface used by the h2o package.

model_fn(

x = c("Year_Sold", "Longitude", "Latitude"),

y = "Sale_Price",

data = ames.h2o

)One approach to get around these inconsistencies is to use a meta engine, which we discuss next.

2.3.2 Many engines

Although there are many individual ML packages available, there is also an abundance of meta engines that can be used to help provide consistency. For example, the following all produce the same linear regression model output:

lm_lm <- lm(Sale_Price ~ ., data = ames)

lm_glm <- glm(Sale_Price ~ ., data = ames,

family = gaussian)

lm_caret <- train(Sale_Price ~ ., data = ames,

method = "lm")Here, lm() and glm() are two different algorithm engines that can be used to fit the linear model and caret::train() is a meta engine (aggregator) that allows you to apply almost any direct engine with method = "<method-name>". There are trade-offs to consider when using direct versus meta engines. For example, using direct engines can allow for extreme flexibility but also requires you to familiarize yourself with the unique differences of each implementation. For example, the following highlights the various syntax nuances required to compute and extract predicted class probabilities across different direct engines.3

| Algorithm | Package | Code |

|---|---|---|

| Linear discriminant analysis | MASS | predict(obj) |

| Generalized linear model | stats | predict(obj, type = "response") |

| Mixture discriminant analysis | mda | predict(obj, type = "posterior") |

| Decision tree | rpart | predict(obj, type = "prob") |

| Random Forest | ranger | predict(obj)$predictions |

| Gradient boosting machine | gbm | predict(obj, type = "response", n.trees) |

Meta engines provide you with more consistency in how you specify inputs and extract outputs but can be less flexible than direct engines. Future chapters will illustrate both approaches. For meta engines, we’ll focus on the caret package in the hardcopy of the book while also demonstrating the newer parsnip package in the additional online resources.4

2.4 Resampling methods

In Section 2.2 we split our data into training and testing sets. Furthermore, we were very explicit about the fact that we do not use the test set to assess model performance during the training phase. So how do we assess the generalization performance of the model?

One option is to assess an error metric based on the training data. Unfortunately, this leads to biased results as some models can perform very well on the training data but not generalize well to a new data set (we’ll illustrate this in Section 2.5).

A second method is to use a validation approach, which involves splitting the training set further to create two parts (as in Section 2.2): a training set and a validation set (or holdout set). We can then train our model(s) on the new training set and estimate the performance on the validation set. Unfortunately, validation using a single holdout set can be highly variable and unreliable unless you are working with very large data sets (Molinaro, Simon, and Pfeiffer 2005; Hawkins, Basak, and Mills 2003). As the size of your data set reduces, this concern increases.

Although we stick to our definitions of test, validation, and holdout sets, these terms are sometimes used interchangeably in other literature and software. What’s important to remember is to always put a portion of the data under lock and key until a final model has been selected (we refer to this as the test data, but others refer to it as the holdout set).

Resampling methods provide an alternative approach by allowing us to repeatedly fit a model of interest to parts of the training data and test its performance on other parts. The two most commonly used resampling methods include k-fold cross validation and bootstrapping.

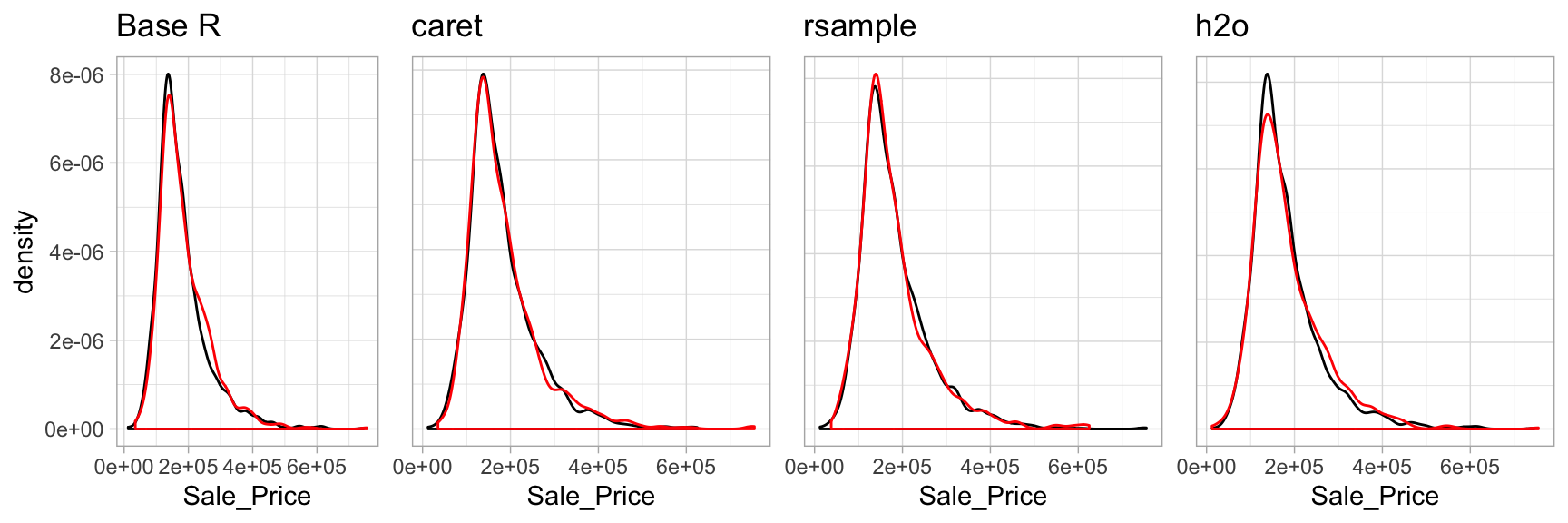

2.4.1 k-fold cross validation

k-fold cross-validation (aka k-fold CV) is a resampling method that randomly divides the training data into k groups (aka folds) of approximately equal size. The model is fit on \(k-1\) folds and then the remaining fold is used to compute model performance. This procedure is repeated k times; each time, a different fold is treated as the validation set. This process results in k estimates of the generalization error (say \(\epsilon_1, \epsilon_2, \dots, \epsilon_k\)). Thus, the k-fold CV estimate is computed by averaging the k test errors, providing us with an approximation of the error we might expect on unseen data.

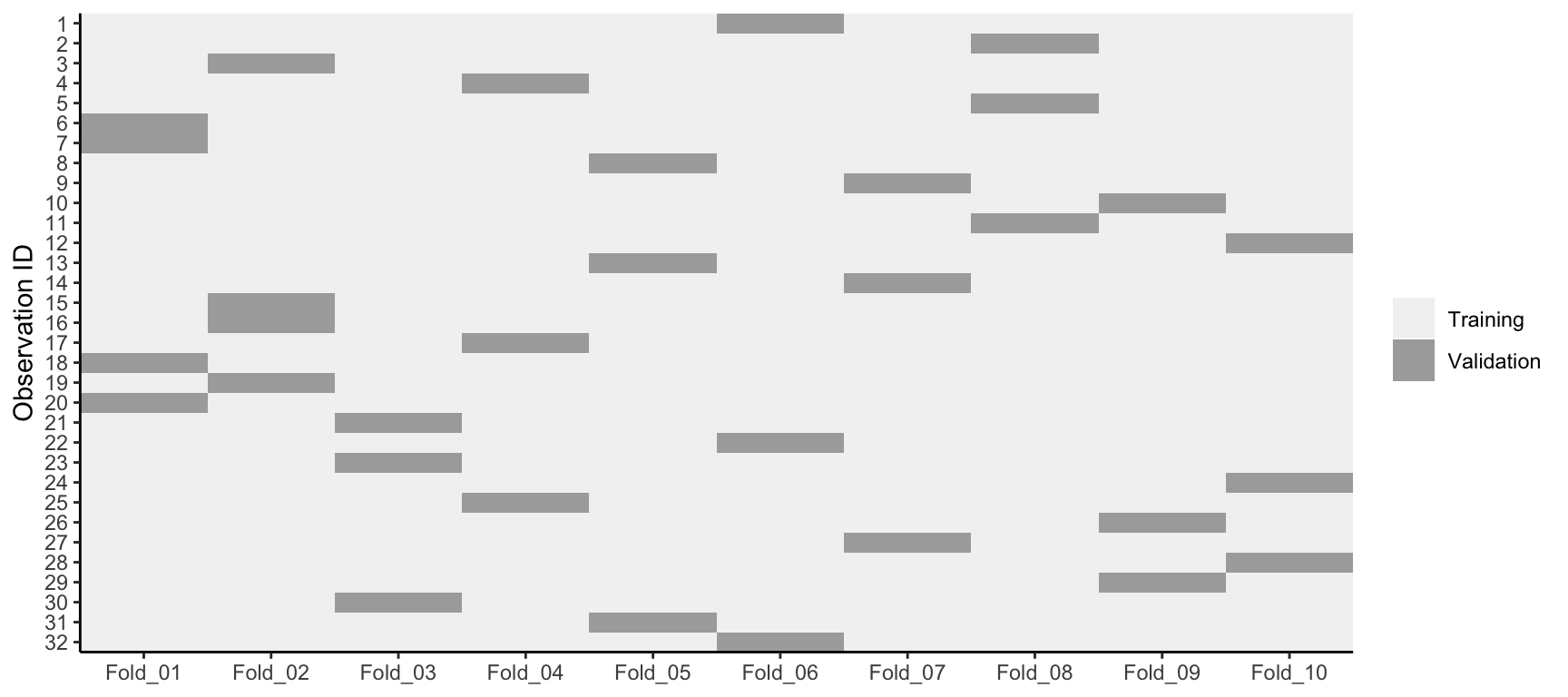

Figure 2.4: Illustration of the k-fold cross validation process.

Consequently, with k-fold CV, every observation in the training data will be held out one time to be included in the test set as illustrated in Figure 2.5. In practice, one typically uses \(k = 5\) or \(k = 10\). There is no formal rule as to the size of k; however, as k gets larger, the difference between the estimated performance and the true performance to be seen on the test set will decrease. On the other hand, using too large k can introduce computational burdens. Moreover, Molinaro, Simon, and Pfeiffer (2005) found that \(k=10\) performed similarly to leave-one-out cross validation (LOOCV) which is the most extreme approach (i.e., setting \(k = n\)).

Figure 2.5: 10-fold cross validation on 32 observations. Each observation is used once for validation and nine times for training.

Although using \(k \geq 10\) helps to minimize the variability in the estimated performance, k-fold CV still tends to have higher variability than bootstrapping (discussed next). Kim (2009) showed that repeating k-fold CV can help to increase the precision of the estimated generalization error. Consequently, for smaller data sets (say \(n < 10,000\)), 10-fold CV repeated 5 or 10 times will improve the accuracy of your estimated performance and also provide an estimate of its variability.

Throughout this book we’ll cover multiple ways to incorporate CV as you can often perform CV directly within certain ML functions:

# Example using h2o

h2o.cv <- h2o.glm(

x = x,

y = y,

training_frame = ames.h2o,

nfolds = 10 # perform 10-fold CV

)Or externally as in the below chunk5. When applying it externally to an ML algorithm as below, we’ll need a process to apply the ML model to each resample, which we’ll also cover.

vfold_cv(ames, v = 10)

## # 10-fold cross-validation

## # A tibble: 10 x 2

## splits id

## <named list> <chr>

## 1 <split [2.6K/293]> Fold01

## 2 <split [2.6K/293]> Fold02

## 3 <split [2.6K/293]> Fold03

## 4 <split [2.6K/293]> Fold04

## 5 <split [2.6K/293]> Fold05

## 6 <split [2.6K/293]> Fold06

## 7 <split [2.6K/293]> Fold07

## 8 <split [2.6K/293]> Fold08

## 9 <split [2.6K/293]> Fold09

## 10 <split [2.6K/293]> Fold102.4.2 Bootstrapping

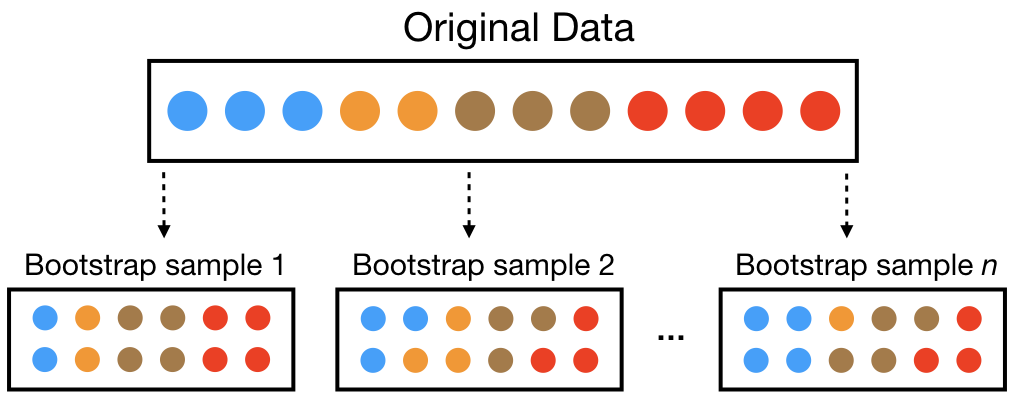

A bootstrap sample is a random sample of the data taken with replacement (Efron and Tibshirani 1986). This means that, after a data point is selected for inclusion in the subset, it’s still available for further selection. A bootstrap sample is the same size as the original data set from which it was constructed. Figure 2.6 provides a schematic of bootstrap sampling where each bootstrap sample contains 12 observations just as in the original data set. Furthermore, bootstrap sampling will contain approximately the same distribution of values (represented by colors) as the original data set.

Figure 2.6: Illustration of the bootstrapping process.

Since samples are drawn with replacement, each bootstrap sample is likely to contain duplicate values. In fact, on average, \(\approx 63.21\)% of the original sample ends up in any particular bootstrap sample. The original observations not contained in a particular bootstrap sample are considered out-of-bag (OOB). When bootstrapping, a model can be built on the selected samples and validated on the OOB samples; this is often done, for example, in random forests (see Chapter 11).

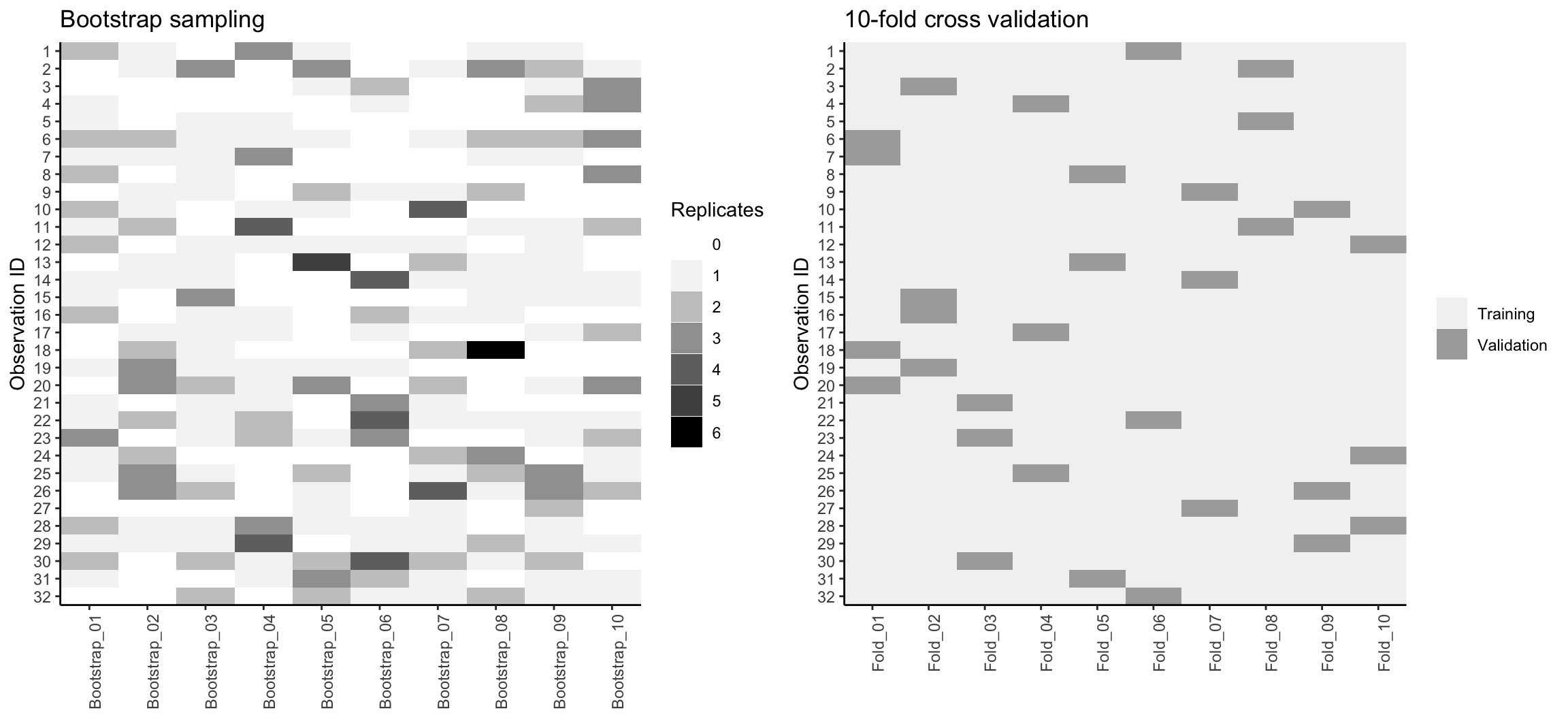

Since observations are replicated in bootstrapping, there tends to be less variability in the error measure compared with k-fold CV (Efron 1983). However, this can also increase the bias of your error estimate. This can be problematic with smaller data sets; however, for most average-to-large data sets (say \(n \geq 1,000\)) this concern is often negligible.

Figure 2.7 compares bootstrapping to 10-fold CV on a small data set with \(n = 32\) observations. A thorough introduction to the bootstrap and its use in R is provided in Davison, Hinkley, and others (1997).

Figure 2.7: Bootstrap sampling (left) versus 10-fold cross validation (right) on 32 observations. For bootstrap sampling, the observations that have zero replications (white) are the out-of-bag observations used for validation.

We can create bootstrap samples easily with rsample::bootstraps(), as illustrated in the code chunk below.

bootstraps(ames, times = 10)

## # Bootstrap sampling

## # A tibble: 10 x 2

## splits id

## <list> <chr>

## 1 <split [2.9K/1.1K]> Bootstrap01

## 2 <split [2.9K/1.1K]> Bootstrap02

## 3 <split [2.9K/1.1K]> Bootstrap03

## 4 <split [2.9K/1K]> Bootstrap04

## 5 <split [2.9K/1.1K]> Bootstrap05

## 6 <split [2.9K/1.1K]> Bootstrap06

## 7 <split [2.9K/1.1K]> Bootstrap07

## 8 <split [2.9K/1.1K]> Bootstrap08

## 9 <split [2.9K/1.1K]> Bootstrap09

## 10 <split [2.9K/1K]> Bootstrap10Bootstrapping is, typically, more of an internal resampling procedure that is naturally built into certain ML algorithms. This will become more apparent in Chapters 10–11 where we discuss bagging and random forests, respectively.

2.4.3 Alternatives

It is important to note that there are other useful resampling procedures. If you’re working with time-series specific data then you will want to incorporate rolling origin and other time series resampling procedures. Hyndman and Athanasopoulos (2018) is the dominant, R-focused, time series resource6.

Additionally, Efron (1983) developed the “632 method” and Efron and Tibshirani (1997) discuss the “632+ method”; both approaches seek to minimize biases experienced with bootstrapping on smaller data sets and are available via caret (see ?caret::trainControl for details).

2.5 Bias variance trade-off

Prediction errors can be decomposed into two important subcomponents: error due to “bias” and error due to “variance”. There is often a tradeoff between a model’s ability to minimize bias and variance. Understanding how different sources of error lead to bias and variance helps us improve the data fitting process resulting in more accurate models.

2.5.1 Bias

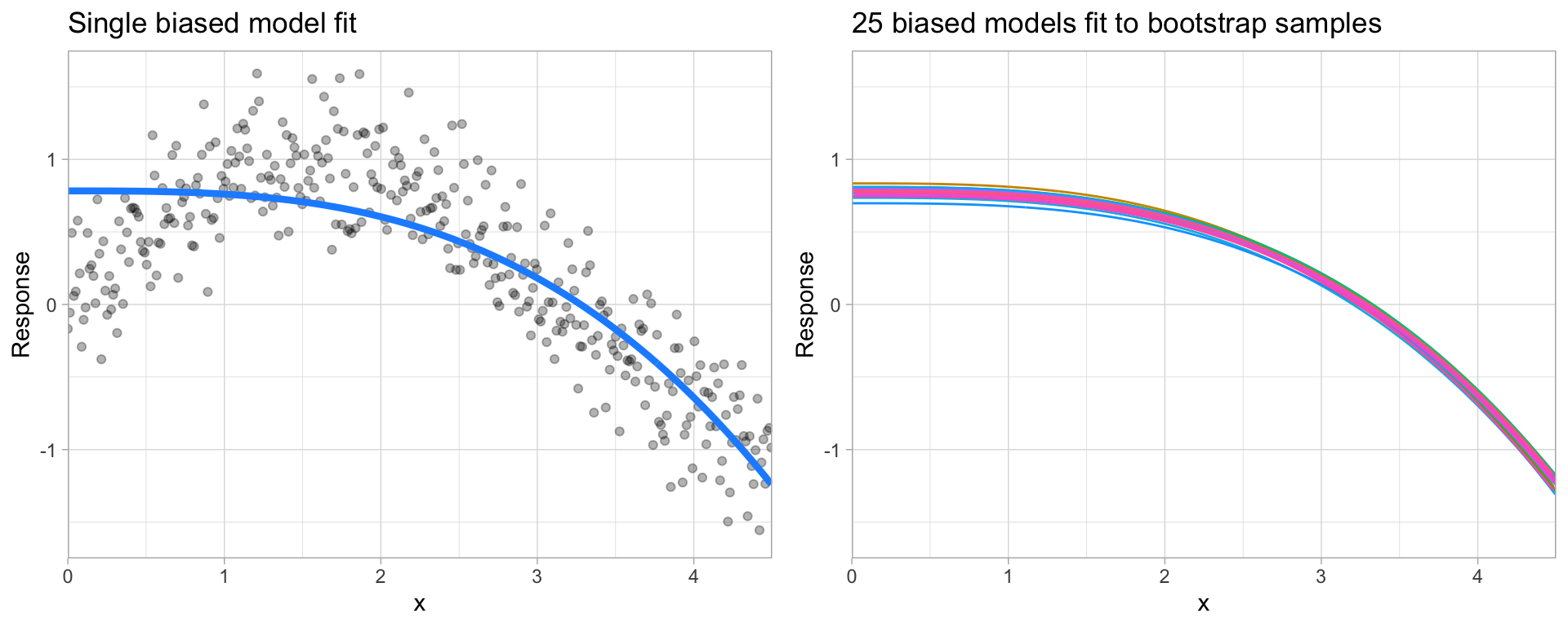

Bias is the difference between the expected (or average) prediction of our model and the correct value which we are trying to predict. It measures how far off in general a model’s predictions are from the correct value, which provides a sense of how well a model can conform to the underlying structure of the data. Figure 2.8 illustrates an example where the polynomial model does not capture the underlying structure well. Linear models are classical examples of high bias models as they are less flexible and rarely capture non-linear, non-monotonic relationships.

We also need to think of bias-variance in relation to resampling. Models with high bias are rarely affected by the noise introduced by resampling. If a model has high bias, it will have consistency in its resampling performance as illustrated by Figure 2.8.

Figure 2.8: A biased polynomial model fit to a single data set does not capture the underlying non-linear, non-monotonic data structure (left). Models fit to 25 bootstrapped replicates of the data are underterred by the noise and generates similar, yet still biased, predictions (right).

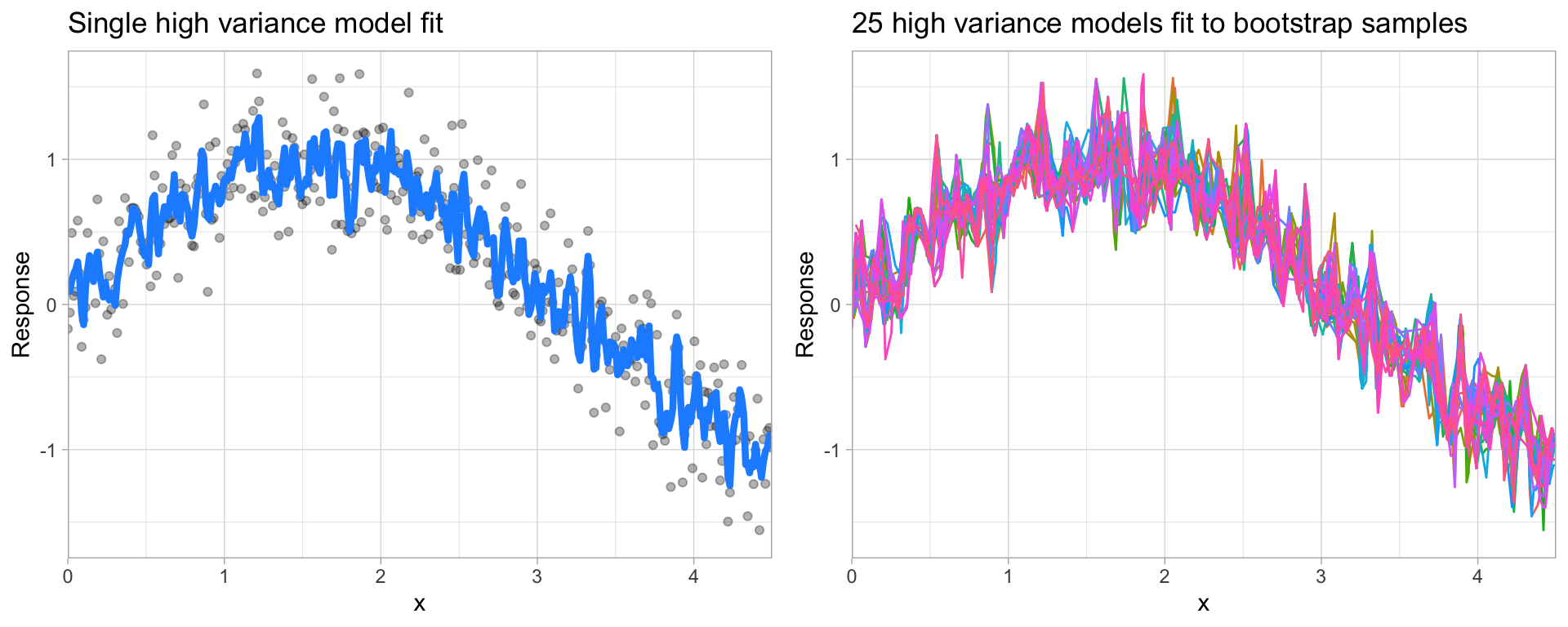

2.5.2 Variance

On the other hand, error due to variance is defined as the variability of a model prediction for a given data point. Many models (e.g., k-nearest neighbor, decision trees, gradient boosting machines) are very adaptable and offer extreme flexibility in the patterns that they can fit to. However, these models offer their own problems as they run the risk of overfitting to the training data. Although you may achieve very good performance on your training data, the model will not automatically generalize well to unseen data.

Figure 2.9: A high variance k-nearest neighbor model fit to a single data set captures the underlying non-linear, non-monotonic data structure well but also overfits to individual data points (left). Models fit to 25 bootstrapped replicates of the data are deterred by the noise and generate highly variable predictions (right).

Since high variance models are more prone to overfitting, using resampling procedures are critical to reduce this risk. Moreover, many algorithms that are capable of achieving high generalization performance have lots of hyperparameters that control the level of model complexity (i.e., the tradeoff between bias and variance).

2.5.3 Hyperparameter tuning

Hyperparameters (aka tuning parameters) are the “knobs to twiddle”7 to control the complexity of machine learning algorithms and, therefore, the bias-variance trade-off. Not all algorithms have hyperparameters (e.g., ordinary least squares8); however, most have at least one or more.

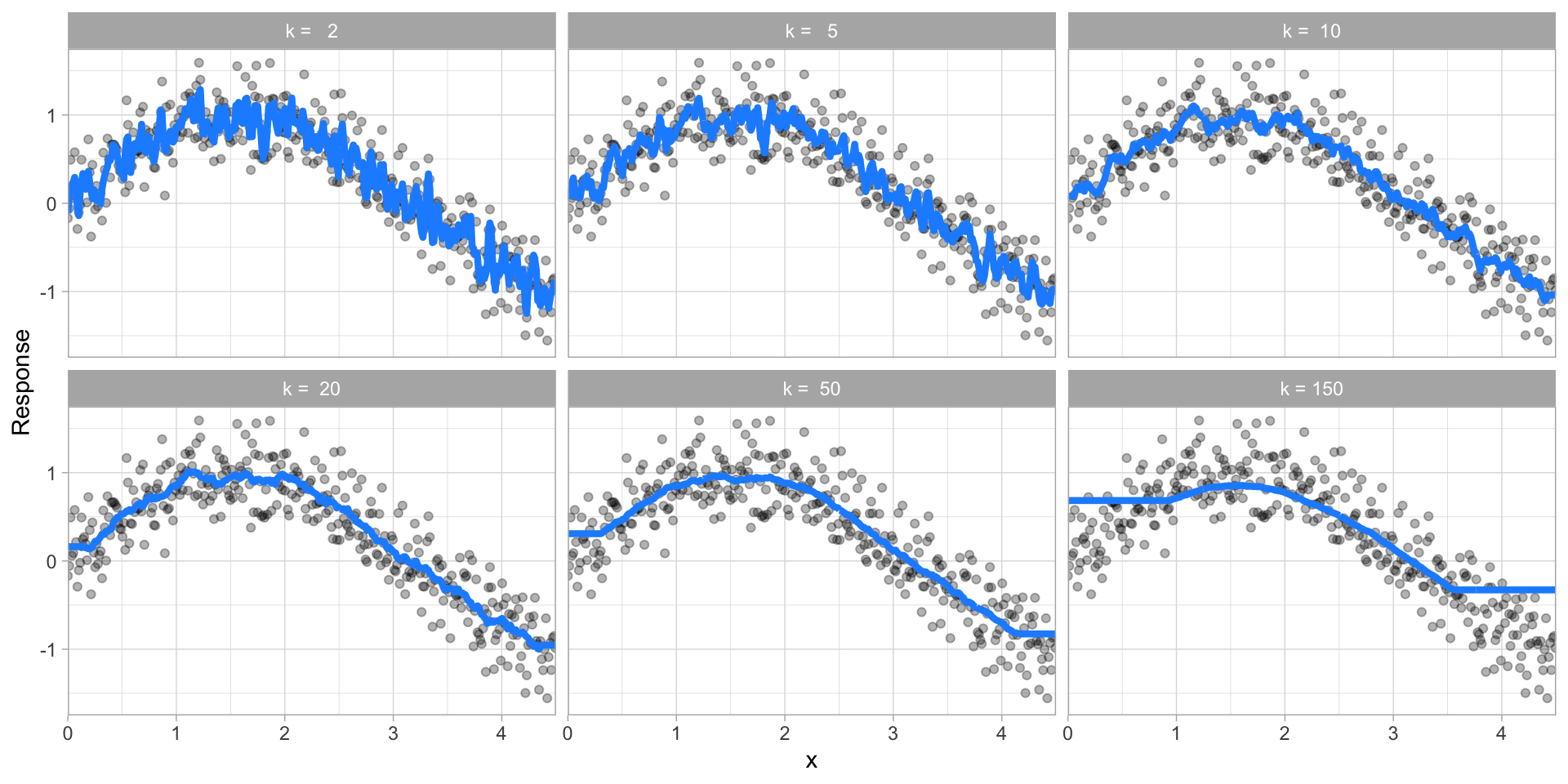

The proper setting of these hyperparameters is often dependent on the data and problem at hand and cannot always be estimated by the training data alone. Consequently, we need a method of identifying the optimal setting. For example, in the high variance example in the previous section, we illustrated a high variance k-nearest neighbor model (we’ll discuss k-nearest neighbor in Chapter 8). k-nearest neighbor models have a single hyperparameter (k) that determines the predicted value to be made based on the k nearest observations in the training data to the one being predicted. If k is small (e.g., \(k=3\)), the model will make a prediction for a given observation based on the average of the response values for the 3 observations in the training data most similar to the observation being predicted. This often results in highly variable predicted values because we are basing the prediction (in this case, an average) on a very small subset of the training data. As k gets bigger, we base our predictions on an average of a larger subset of the training data, which naturally reduces the variance in our predicted values (remember this for later, averaging often helps to reduce variance!). Figure 2.10 illustrates this point. Smaller k values (e.g., 2, 5, or 10) lead to high variance (but lower bias) and larger values (e.g., 150) lead to high bias (but lower variance). The optimal k value might exist somewhere between 20–50, but how do we know which value of k to use?

Figure 2.10: k-nearest neighbor model with differing values for k.

One way to perform hyperparameter tuning is to fiddle with hyperparameters manually until you find a great combination of hyperparameter values that result in high predictive accuracy (as measured using k-fold CV, for instance). However, this can be very tedious work depending on the number of hyperparameters. An alternative approach is to perform a grid search. A grid search is an automated approach to searching across many combinations of hyperparameter values.

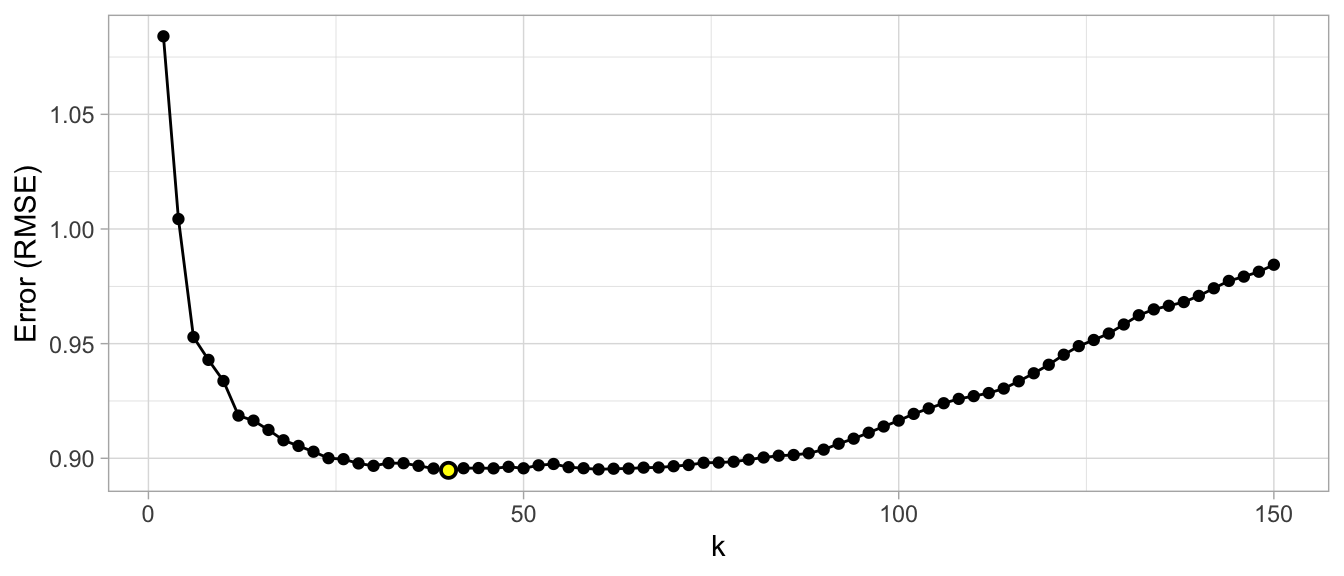

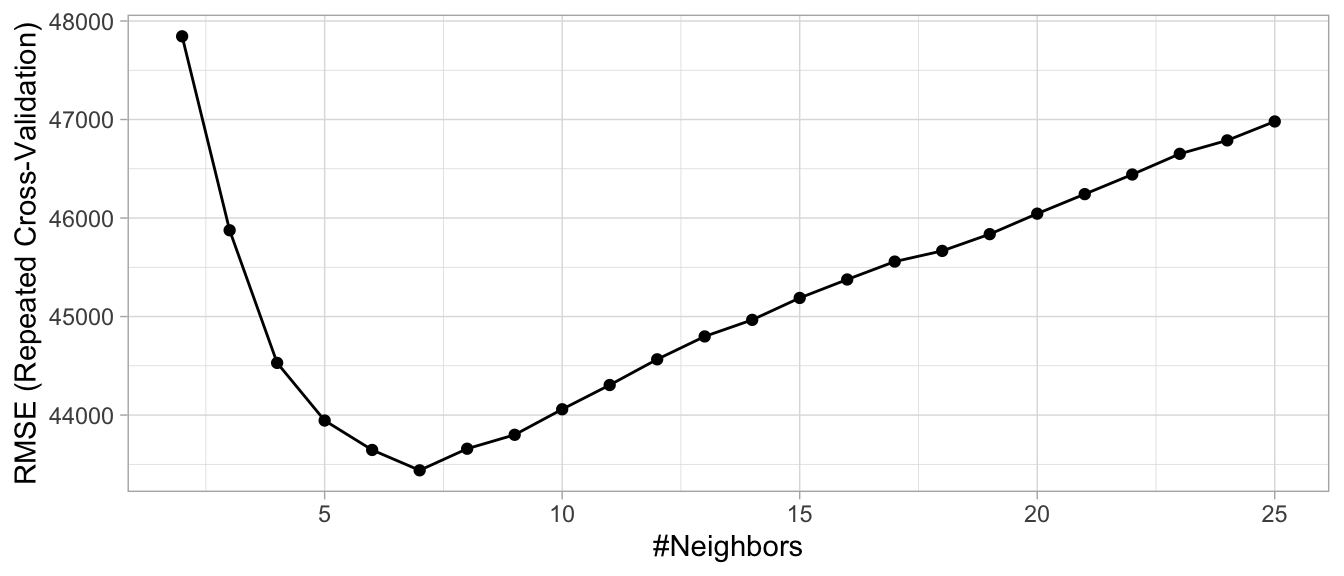

For our k-nearest neighbor example, a grid search would predefine a candidate set of values for k (e.g., \(k = 1, 2, \dots, j\)) and perform a resampling method (e.g., k-fold CV) to estimate which k value generalizes the best to unseen data. Figure 2.11 illustrates the results from a grid search to assess \(k = 2, 12, 14, \dots, 150\) using repeated 10-fold CV. The error rate displayed represents the average error for each value of k across all the repeated CV folds. On average, \(k=46\) was the optimal hyperparameter value to minimize error (in this case, RMSE which is discussed in Section 2.6)) on unseen data.

Figure 2.11: Results from a grid search for a k-nearest neighbor model assessing values for k ranging from 2-150. We see high error values due to high model variance when k is small and we also see high errors values due to high model bias when k is large. The optimal model is found at k = 46.

Throughout this book you’ll be exposed to different approaches to performing grid searches. In the above example, we used a full cartesian grid search, which assesses every hyperparameter value manually defined. However, as models get more complex and offer more hyperparameters, this approach can become computationally burdensome and requires you to define the optimal hyperparameter grid settings to explore. Additional approaches we’ll illustrate include random grid searches (Bergstra and Bengio 2012) which explores randomly selected hyperparameter values from a range of possible values, early stopping which allows you to stop a grid search once reduction in the error stops marginally improving, adaptive resampling via futility analysis (Kuhn 2014) which adaptively resamples candidate hyperparameter values based on approximately optimal performance, and more.

2.6 Model evaluation

Historically, the performance of statistical models was largely based on goodness-of-fit tests and assessment of residuals. Unfortunately, misleading conclusions may follow from predictive models that pass these kinds of assessments (Breiman and others 2001). Today, it has become widely accepted that a more sound approach to assessing model performance is to assess the predictive accuracy via loss functions. Loss functions are metrics that compare the predicted values to the actual value (the output of a loss function is often referred to as the error or pseudo residual). When performing resampling methods, we assess the predicted values for a validation set compared to the actual target value. For example, in regression, one way to measure error is to take the difference between the actual and predicted value for a given observation (this is the usual definition of a residual in ordinary linear regression). The overall validation error of the model is computed by aggregating the errors across the entire validation data set.

There are many loss functions to choose from when assessing the performance of a predictive model, each providing a unique understanding of the predictive accuracy and differing between regression and classification models. Furthermore, the way a loss function is computed will tend to emphasize certain types of errors over others and can lead to drastic differences in how we interpret the “optimal model”. Its important to consider the problem context when identifying the preferred performance metric to use. And when comparing multiple models, we need to compare them across the same metric.

2.6.1 Regression models

MSE: Mean squared error is the average of the squared error (\(MSE = \frac{1}{n} \sum^n_{i=1}(y_i - \hat y_i)^2\))9. The squared component results in larger errors having larger penalties. This (along with RMSE) is the most common error metric to use. Objective: minimize

RMSE: Root mean squared error. This simply takes the square root of the MSE metric (\(RMSE = \sqrt{\frac{1}{n} \sum^n_{i=1}(y_i - \hat y_i)^2}\)) so that your error is in the same units as your response variable. If your response variable units are dollars, the units of MSE are dollars-squared, but the RMSE will be in dollars. Objective: minimize

Deviance: Short for mean residual deviance. In essence, it provides a degree to which a model explains the variation in a set of data when using maximum likelihood estimation. Essentially this compares a saturated model (i.e. fully featured model) to an unsaturated model (i.e. intercept only or average). If the response variable distribution is Gaussian, then it will be approximately equal to MSE. When not, it usually gives a more useful estimate of error. Deviance is often used with classification models.10 Objective: minimize

MAE: Mean absolute error. Similar to MSE but rather than squaring, it just takes the mean absolute difference between the actual and predicted values (\(MAE = \frac{1}{n} \sum^n_{i=1}(\vert y_i - \hat y_i \vert)\)). This results in less emphasis on larger errors than MSE. Objective: minimize

RMSLE: Root mean squared logarithmic error. Similar to RMSE but it performs a

log()on the actual and predicted values prior to computing the difference (\(RMSLE = \sqrt{\frac{1}{n} \sum^n_{i=1}(log(y_i + 1) - log(\hat y_i + 1))^2}\)). When your response variable has a wide range of values, large response values with large errors can dominate the MSE/RMSE metric. RMSLE minimizes this impact so that small response values with large errors can have just as meaningful of an impact as large response values with large errors. Objective: minimize\(R^2\): This is a popular metric that represents the proportion of the variance in the dependent variable that is predictable from the independent variable(s). Unfortunately, it has several limitations. For example, two models built from two different data sets could have the exact same RMSE but if one has less variability in the response variable then it would have a lower \(R^2\) than the other. You should not place too much emphasis on this metric. Objective: maximize

Most models we assess in this book will report most, if not all, of these metrics. We will emphasize MSE and RMSE but it’s important to realize that certain situations warrant emphasis on some metrics more than others.

2.6.2 Classification models

Misclassification: This is the overall error. For example, say you are predicting 3 classes ( high, medium, low ) and each class has 25, 30, 35 observations respectively (90 observations total). If you misclassify 3 observations of class high, 6 of class medium, and 4 of class low, then you misclassified 13 out of 90 observations resulting in a 14% misclassification rate. Objective: minimize

Mean per class error: This is the average error rate for each class. For the above example, this would be the mean of \(\frac{3}{25}, \frac{6}{30}, \frac{4}{35}\), which is 14.5%. If your classes are balanced this will be identical to misclassification. Objective: minimize

MSE: Mean squared error. Computes the distance from 1.0 to the probability suggested. So, say we have three classes, A, B, and C, and your model predicts a probability of 0.91 for A, 0.07 for B, and 0.02 for C. If the correct answer was A the \(MSE = 0.09^2 = 0.0081\), if it is B \(MSE = 0.93^2 = 0.8649\), if it is C \(MSE = 0.98^2 = 0.9604\). The squared component results in large differences in probabilities for the true class having larger penalties. Objective: minimize

Cross-entropy (aka Log Loss or Deviance): Similar to MSE but it incorporates a log of the predicted probability multiplied by the true class. Consequently, this metric disproportionately punishes predictions where we predict a small probability for the true class, which is another way of saying having high confidence in the wrong answer is really bad. Objective: minimize

Gini index: Mainly used with tree-based methods and commonly referred to as a measure of purity where a small value indicates that a node contains predominantly observations from a single class. Objective: minimize

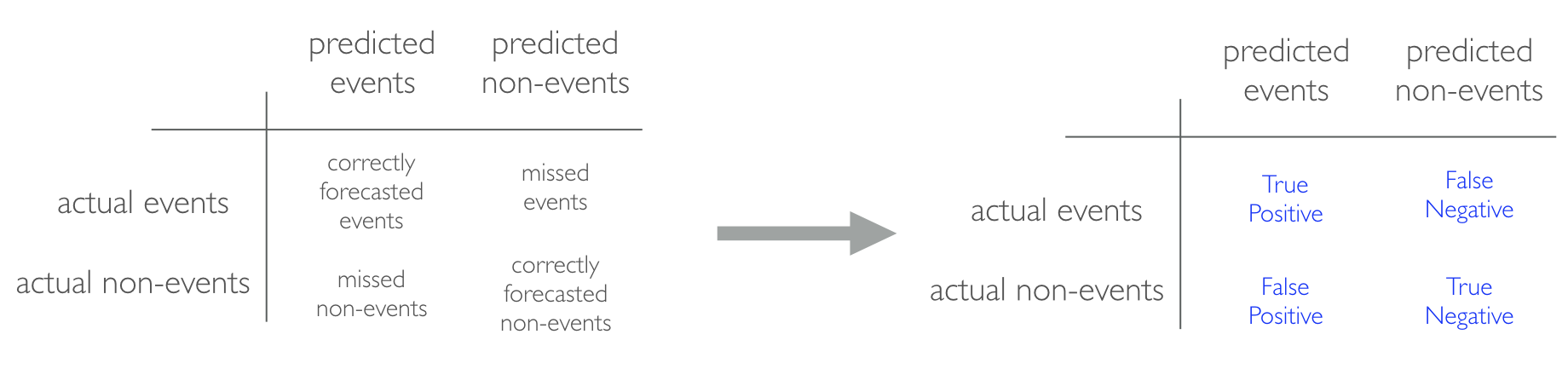

When applying classification models, we often use a confusion matrix to evaluate certain performance measures. A confusion matrix is simply a matrix that compares actual categorical levels (or events) to the predicted categorical levels. When we predict the right level, we refer to this as a true positive. However, if we predict a level or event that did not happen this is called a false positive (i.e. we predicted a customer would redeem a coupon and they did not). Alternatively, when we do not predict a level or event and it does happen that this is called a false negative (i.e. a customer that we did not predict to redeem a coupon does).

Figure 2.12: Confusion matrix and relationships to terms such as true-positive and false-negative.

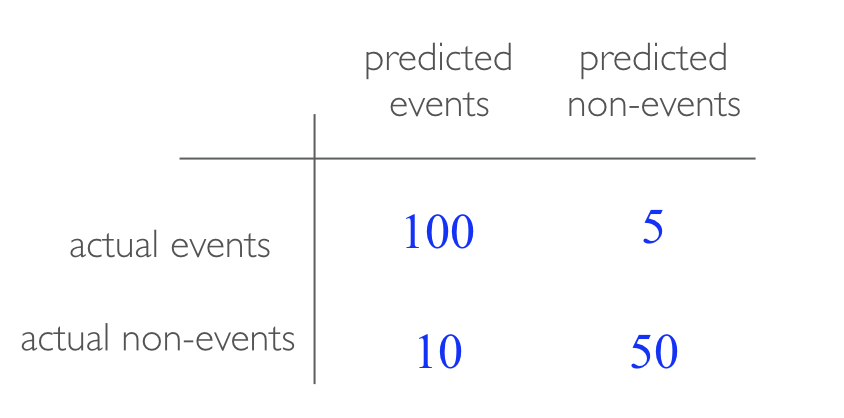

We can extract different levels of performance for binary classifiers. For example, given the classification (or confusion) matrix illustrated in Figure 2.13 we can assess the following:

Accuracy: Overall, how often is the classifier correct? Opposite of misclassification above. Example: \(\frac{TP + TN}{total} = \frac{100+50}{165} = 0.91\). Objective: maximize

Precision: How accurately does the classifier predict events? This metric is concerned with maximizing the true positives to false positive ratio. In other words, for the number of predictions that we made, how many were correct? Example: \(\frac{TP}{TP + FP} = \frac{100}{100+10} = 0.91\). Objective: maximize

Sensitivity (aka recall): How accurately does the classifier classify actual events? This metric is concerned with maximizing the true positives to false negatives ratio. In other words, for the events that occurred, how many did we predict? Example: \(\frac{TP}{TP + FN} = \frac{100}{100+5} = 0.95\). Objective: maximize

Specificity: How accurately does the classifier classify actual non-events? Example: \(\frac{TN}{TN + FP} = \frac{50}{50+10} = 0.83\). Objective: maximize

Figure 2.13: Example confusion matrix.

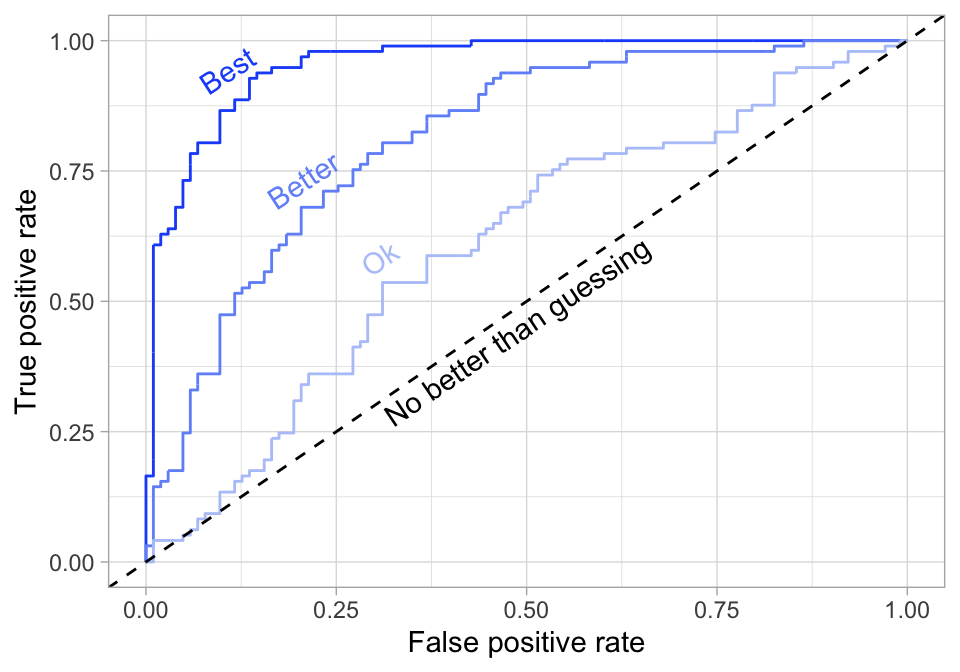

- AUC: Area under the curve. A good binary classifier will have high precision and sensitivity. This means the classifier does well when it predicts an event will and will not occur, which minimizes false positives and false negatives. To capture this balance, we often use a ROC curve that plots the false positive rate along the x-axis and the true positive rate along the y-axis. A line that is diagonal from the lower left corner to the upper right corner represents a random guess. The higher the line is in the upper left-hand corner, the better. AUC computes the area under this curve. Objective: maximize

Figure 2.14: ROC curve.

2.7 Putting the processes together

To illustrate how this process works together via R code, let’s do a simple assessment on the ames housing data. First, we perform stratified sampling as illustrated in Section 2.2.2 to break our data into training vs. test data while ensuring we have consistent distributions between the training and test sets.

# Stratified sampling with the rsample package

set.seed(123)

split <- initial_split(ames, prop = 0.7,

strata = "Sale_Price")

ames_train <- training(split)

ames_test <- testing(split)Next, we’re going to apply a k-nearest neighbor regressor to our data. To do so, we’ll use caret, which is a meta-engine to simplify the resampling, grid search, and model application processes. The following defines:

- Resampling method: we use 10-fold CV repeated 5 times.

- Grid search: we specify the hyperparameter values to assess (\(k = 2, 3, 4, \dots, 25\)).

- Model training & Validation: we train a k-nearest neighbor (

method = "knn") model using our pre-specified resampling procedure (trControl = cv), grid search (tuneGrid = hyper_grid), and preferred loss function (metric = "RMSE").

This grid search takes approximately 3.5 minutes

# Specify resampling strategy

cv <- trainControl(

method = "repeatedcv",

number = 10,

repeats = 5

)

# Create grid of hyperparameter values

hyper_grid <- expand.grid(k = seq(2, 25, by = 1))

# Tune a knn model using grid search

knn_fit <- train(

Sale_Price ~ .,

data = ames_train,

method = "knn",

trControl = cv,

tuneGrid = hyper_grid,

metric = "RMSE"

)Looking at our results we see that the best model coincided with \(k=\) 7, which resulted in an RMSE of 43439.07. This implies that, on average, our model mispredicts the expected sale price of a home by $43,439. Figure 2.15 illustrates the cross-validated error rate across the spectrum of hyperparameter values that we specified.

# Print and plot the CV results

knn_fit

## k-Nearest Neighbors

##

## 2053 samples

## 80 predictor

##

## No pre-processing

## Resampling: Cross-Validated (10 fold, repeated 5 times)

## Summary of sample sizes: 1848, 1848, 1848, 1847, 1849, 1847, ...

## Resampling results across tuning parameters:

##

## k RMSE Rsquared MAE

## 2 47844.53 0.6538046 31002.72

## 3 45875.79 0.6769848 29784.69

## 4 44529.50 0.6949240 28992.48

## 5 43944.65 0.7026947 28738.66

## 6 43645.76 0.7079683 28553.50

## 7 43439.07 0.7129916 28617.80

## 8 43658.35 0.7123254 28769.16

## 9 43799.74 0.7128924 28905.50

## 10 44058.76 0.7108900 29061.68

## 11 44304.91 0.7091949 29197.78

## 12 44565.82 0.7073437 29320.81

## 13 44798.10 0.7056491 29475.33

## 14 44966.27 0.7051474 29561.70

## 15 45188.86 0.7036000 29731.56

## 16 45376.09 0.7027152 29860.67

## 17 45557.94 0.7016254 29974.44

## 18 45666.30 0.7021351 30018.59

## 19 45836.33 0.7013026 30105.50

## 20 46044.44 0.6997198 30235.80

## 21 46242.59 0.6983978 30367.95

## 22 46441.87 0.6969620 30481.48

## 23 46651.66 0.6953968 30611.48

## 24 46788.22 0.6948738 30681.97

## 25 46980.13 0.6928159 30777.25

##

## RMSE was used to select the optimal model using the smallest value.

## The final value used for the model was k = 7.

ggplot(knn_fit)

Figure 2.15: Results from a grid search for a k-nearest neighbor model on the Ames housing data assessing values for k ranging from 2-25.

The question remains: “Is this the best predictive model we can find?” We may have identified the optimal k-nearest neighbor model for our given data set, but this doesn’t mean we’ve found the best possible overall model. Nor have we considered potential feature and target engineering options. The remainder of this book will walk you through the journey of identifying alternative solutions and, hopefully, a much more optimal model.

References

Bergstra, James, and Yoshua Bengio. 2012. “Random Search for Hyper-Parameter Optimization.” Journal of Machine Learning Research 13 (Feb): 281–305.

Breiman, Leo, and others. 2001. “Statistical Modeling: The Two Cultures (with Comments and a Rejoinder by the Author).” Statistical Science 16 (3). Institute of Mathematical Statistics: 199–231.

Chawla, Nitesh V, Kevin W Bowyer, Lawrence O Hall, and W Philip Kegelmeyer. 2002. “SMOTE: Synthetic Minority over-Sampling Technique.” Journal of Artificial Intelligence Research 16: 321–57.

Davison, Anthony Christopher, David Victor Hinkley, and others. 1997. Bootstrap Methods and Their Application. Vol. 1. Cambridge University Press.

Efron, Bradley. 1983. “Estimating the Error Rate of a Prediction Rule: Improvement on Cross-Validation.” Journal of the American Statistical Association 78 (382). Taylor & Francis: 316–31.

Efron, Bradley, and Robert Tibshirani. 1986. “Bootstrap Methods for Standard Errors, Confidence Intervals, and Other Measures of Statistical Accuracy.” Statistical Science. JSTOR, 54–75.

Efron, Bradley, and Robert Tibshirani. 1997. “Improvements on Cross-Validation: The 632+ Bootstrap Method.” Journal of the American Statistical Association 92 (438). Taylor & Francis: 548–60.

Hawkins, Douglas M, Subhash C Basak, and Denise Mills. 2003. “Assessing Model Fit by Cross-Validation.” Journal of Chemical Information and Computer Sciences 43 (2). ACS Publications: 579–86.

Hyndman, Rob J, and George Athanasopoulos. 2018. Forecasting: Principles and Practice. OTexts.

Kim, Ji-Hyun. 2009. “Estimating Classification Error Rate: Repeated Cross-Validation, Repeated Hold-Out and Bootstrap.” Computational Statistics & Data Analysis 53 (11). Elsevier: 3735–45.

Kuhn, Max. 2014. “Futility Analysis in the Cross-Validation of Machine Learning Models.” arXiv Preprint arXiv:1405.6974.

Kuhn, Max. 2017b. “The R Formula Method: The Bad Parts.” R Views. https://rviews.rstudio.com/2017/03/01/the-r-formula-method-the-bad-parts/.

Kuhn, Max, and Kjell Johnson. 2013. Applied Predictive Modeling. Vol. 26. Springer.

Molinaro, Annette M, Richard Simon, and Ruth M Pfeiffer. 2005. “Prediction Error Estimation: A Comparison of Resampling Methods.” Bioinformatics 21 (15). Oxford University Press: 3301–7.

Wolpert, David H. 1996. “The Lack of a Priori Distinctions Between Learning Algorithms.” Neural Computation 8 (7). MIT Press: 1341–90.

See https://www.fatml.org/resources/relevant-scholarship for many discussions regarding implications of poorly applied and interpreted ML.↩

Many of these drawbacks and inconsistencies were originally organized and presented by Kuhn (2018).↩

The caret package has been the preferred meta engine over the years; however, the author is now transitioning to full-time development on parsnip, which is designed to be a more robust and tidy meta engine.↩

rsample::vfold_cv()results in a nested data frame where each element insplitsis a list containing the training data frame and the observation IDs that will be used for training the model vs. model validation.↩See their open source book at https://www.otexts.org/fpp2↩

This phrase comes from Brad Efron’s comments in Breiman and others (2001)↩

At least in the ordinary sense. You could think of polynomial regression as having a single hyperparameter, the degree of the polynomial.↩

This deviates slightly from the usual definition of MSE in ordinary linear regression, where we divide by \(n-p\) (to adjust for bias) as opposed to \(n\).↩

See this StackExchange thread (http://bit.ly/what-is-deviance) for a good overview of deviance for different models and in the context of regression versus classification.↩